Release at pace with test automation: What, why, and how to measure success?

Automation testing has become a buzzword for meeting software quality needs faster. In many C-suites, I have seen it treated as the only answer to releasing at pace. But do we really understand the value it can add, and the risks that come with it? If it is not implemented correctly, it can easily eat into your company’s funds.

In this article, I will cover the basics and share some tips so that you have a steady ride on the automation rollercoaster. Let’s go!

What is automation testing?

It is a technique in which specialists use automation testing tools to execute a suite of test cases.

Automation testing demands a considerable investment of money and resources, which is why it must be done properly.

Why is test automation beneficial?

In my experience, it is the best way to increase effectiveness and test coverage, and to speed up how quickly we release.

Some of the benefits of automation:

- In some cases, it can be more than 70% faster than manual testing.

- It is generally reliable if done correctly and maintained smartly.

- Automation can be run multiple times and overnight.

- It reduces the chance of human error and improves accuracy.

- Automation testing helps increase test coverage.

For me, automation has been a lifesaver when we have not had the capacity or time to test manually. It has helped catch defects that would have greatly impacted our customers.

My key advice is to run automation frequently: the quicker the feedback, the better!

What should we automate?

Now, this is a really important question. We should not automate everything, especially if it leads to flaky tests.

Six types of test case to automate:

- High-risk areas — business-critical test cases.

- Test cases that are repeatedly executed.

- Positive test cases.

- User interface (UI) tests.

- Test cases that are very tedious or difficult to test manually.

- Test cases that are very time-consuming to run and create.

Example scenarios of what we ‘should’ automate:

- Comparing two images pixel by pixel.

- Comparing two spreadsheets containing thousands of rows and columns.

- Testing an application on different browsers and different operating systems (OS) in parallel.
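For the first two scenarios, the core check is the same: walk two grids cell by cell and report every mismatch, something that is tedious and error-prone by hand. The sketch below is a minimal Python illustration, assuming both inputs have already been loaded into lists of rows (a real image comparison would first decode the files with an imaging library, which is not shown). `diff_grids` and the sample data are hypothetical.

```python
import csv
import io
from itertools import zip_longest


def diff_grids(expected, actual):
    """Return (row, col, expected_value, actual_value) for every cell that differs.

    Works on any grid stored as a list of rows: spreadsheet rows read with the
    csv module, or an image decoded into rows of pixel tuples. Cells missing
    from one side (grids of different sizes) are reported as None.
    """
    differences = []
    # Pad a missing row with an empty tuple so extra rows are still compared.
    for r, (exp_row, act_row) in enumerate(zip_longest(expected, actual, fillvalue=())):
        # zip_longest pads the shorter row with None.
        for c, (exp_cell, act_cell) in enumerate(zip_longest(exp_row, act_row)):
            if exp_cell != act_cell:
                differences.append((r, c, exp_cell, act_cell))
    return differences


# Spreadsheet example: two small CSV exports (hypothetical data).
expected_sheet = list(csv.reader(io.StringIO("id,amount\n1,100\n2,250\n")))
actual_sheet = list(csv.reader(io.StringIO("id,amount\n1,100\n2,205\n")))
print(diff_grids(expected_sheet, actual_sheet))  # [(2, 1, '250', '205')]

# Image example: 1x2 images as rows of RGB tuples (hypothetical pixels).
expected_image = [[(0, 0, 0), (255, 255, 255)]]
actual_image = [[(0, 0, 0), (255, 0, 0)]]
print(diff_grids(expected_image, actual_image))  # [(0, 1, (255, 255, 255), (255, 0, 0))]
```

Reporting every differing cell, rather than stopping at the first one, gives a tester the full picture from a single run over thousands of rows.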

What should we NOT automate?

Now for the counter-question. This is key, and we should always keep it at the back of our minds when designing automated tests.

We should not automate:

- Test cases that are newly designed and have not been executed manually at least once.

- Test cases for which the requirements are frequently changing.

- Test cases that are executed on an ad hoc basis.

- Negative failover tests.

- Tests that require a massive amount of pre-setup.

- Test cases where the automation effort will take a long time to deliver a return on investment.
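The last point can be made concrete with a simple break-even estimate: divide the one-off effort of scripting a test by the effort saved each time it runs. This Python sketch assumes effort is measured in hours and that the per-run cost of the automated test already includes triaging results and a share of maintenance; `runs_to_break_even` and the figures below are hypothetical.

```python
import math


def runs_to_break_even(build_hours, manual_hours_per_run, automated_hours_per_run):
    """Estimate how many runs it takes for an automated test to pay for itself.

    build_hours: one-off effort to script the test case.
    manual_hours_per_run: effort to execute the test case by hand once.
    automated_hours_per_run: effort per automated run (triage of results plus
        an allowance for maintenance).
    Returns None when automation saves nothing per run, so it never pays off.
    """
    saving_per_run = manual_hours_per_run - automated_hours_per_run
    if saving_per_run <= 0:
        return None
    # Round up to a whole number of runs.
    return math.ceil(build_hours / saving_per_run)


# Hypothetical figures: 16 h to script, 2 h by hand, 0.5 h per automated run.
print(runs_to_break_even(16, 2, 0.5))  # 11
print(runs_to_break_even(16, 1, 1))    # None
```

With these illustrative numbers the script pays for itself after 11 runs. If the test case only runs a couple of times a year, that is a long wait, which makes it a candidate to keep manual.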

The process of test automation

This should be planned out, with time invested to think it through. I have often seen automation fail because of flaky tests and because people chose to automate for the sake of automation.

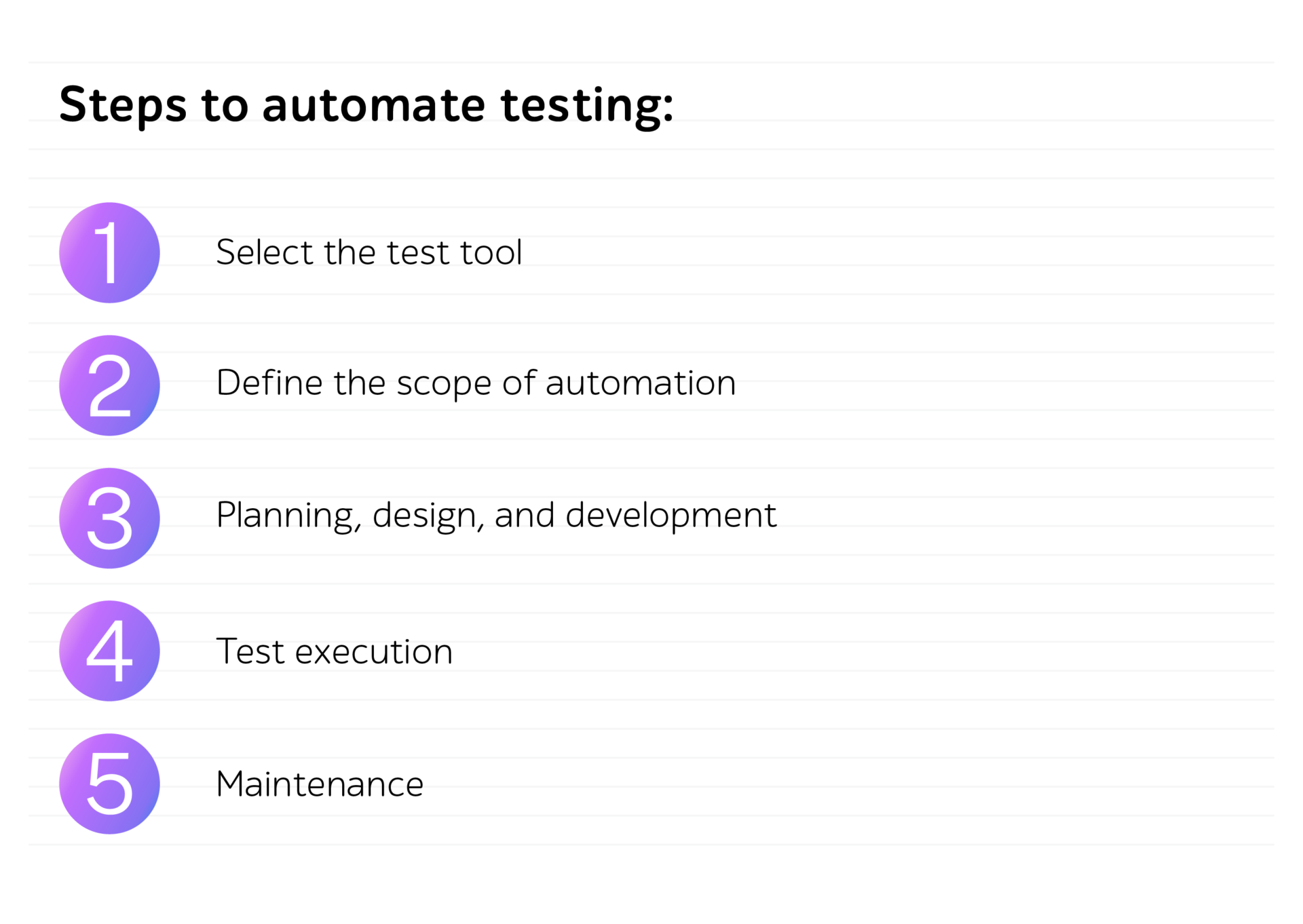

Here are the steps for automating your software testing:

1. Select the test tool. This is done via a proof of concept involving many stakeholders, so that the right decision can be made.

2. Define the scope of automation. Factors to consider:

- The features in scope, which should be clearly defined

- The scenarios that need a large amount of data

- Technology feasibility review

- Review the complexity of test cases

- Review whether we want to incorporate cross-browser testing.

3. Planning, design, and development:

- Automation tool selection

- Framework design and its features:

  - The most popular open-source web automation framework is ‘Selenium WebDriver’

- Define your scripting standards, such as:

  - Uniform scripts, comments, and indentation of code

  - Exception handling

  - User-defined messages coded into the scripts

- In-scope and out-of-scope items for automation

- Automation test bed preparation

- Schedule and timeline of scripting and execution

- Deliverables of automation testing

4. Test execution.

5. Maintenance.
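The scripting standards in step 3 (exception handling and user-defined messages) might look something like this in practice. This is a minimal Python sketch using only the standard `logging` module; `run_step`, `StepFailed` and `add_to_basket` are hypothetical names, and in a Selenium suite the `action` could be a page-object method.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
logger = logging.getLogger("automation")


class StepFailed(Exception):
    """Raised when a test step fails; its message is readable in test reports."""


def run_step(description, action, *args, **kwargs):
    """Run one test step with uniform logging and exception handling.

    description: user-defined, human-readable name for the step.
    action: any callable that performs the step.
    """
    logger.info("STEP: %s", description)
    try:
        return action(*args, **kwargs)
    except Exception as exc:
        # Build a user-defined message that names the step and the root cause.
        message = f"Step failed: {description} ({type(exc).__name__}: {exc})"
        logger.error(message)
        # Chain the original exception so the full traceback is kept.
        raise StepFailed(message) from exc


# Hypothetical step used only for illustration.
def add_to_basket(item, quantity):
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    return {"item": item, "quantity": quantity}


print(run_step("Add one book to basket", add_to_basket, "book", 1))
try:
    run_step("Add zero books to basket", add_to_basket, "book", 0)
except StepFailed as err:
    print(err)  # Step failed: Add zero books to basket (ValueError: quantity must be positive)
```

Wrapping every step this way keeps failure messages uniform across the suite, and `raise ... from exc` preserves the original error for debugging.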

The last phase, maintenance, is crucial. If the tests are not maintained, technical debt is not paid down, and flaky tests are not removed, you will find that automation testing takes more time and money than manual testing.
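One common way to find flaky tests is to rerun each test several times and flag any that both pass and fail. The following is a minimal, framework-agnostic Python sketch, assuming a test is any callable that raises on failure; `classify_test` and the sample test are hypothetical, and many test runners offer rerun plugins that can do this for you.

```python
from collections import Counter
from itertools import cycle


def classify_test(test, runs=5):
    """Run a test callable several times and classify how stable it is.

    A run passes if the callable returns without raising an exception.
    Returns "stable-pass", "stable-fail", or "flaky" (mixed results).
    """
    outcomes = Counter()
    for _ in range(runs):
        try:
            test()
            outcomes["pass"] += 1
        except Exception:
            outcomes["fail"] += 1
    if outcomes["fail"] == 0:
        return "stable-pass"
    if outcomes["pass"] == 0:
        return "stable-fail"
    return "flaky"


# Hypothetical test that alternates between passing and failing,
# much like a UI check with a timing problem.
_visibility = cycle([True, False])


def check_banner_visible():
    assert next(_visibility), "banner not visible yet"


print(classify_test(check_banner_visible))  # flaky
print(classify_test(lambda: None))          # stable-pass
```

Tests flagged as flaky are candidates for fixing (for example, replacing fixed sleeps with explicit waits) or for removal during maintenance.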

How to measure the success of your automation suite?

So, we have automation in place. It is important to track its success; if it is falling short, the data you have tracked will help you get back on the right path.

Some automation metrics I would recommend measuring:

- Percentage of defects found by automation.

- Time required for automation testing in each release cycle.

- Time taken for a release with automation testing vs. the time it would take if the same scripts were executed manually.

- Customer satisfaction index.

- Productivity improvement.
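The first few metrics are simple ratios once you record the raw numbers for each release. This Python sketch assumes you can pull defect counts from your defect tracker and test durations from your CI system; the `ReleaseStats` fields and sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReleaseStats:
    """Raw figures for one release (hypothetical fields; source them from your tools)."""
    defects_found_by_automation: int
    defects_found_total: int
    automated_test_hours: float
    estimated_manual_test_hours: float


def defect_detection_percentage(stats):
    """Percentage of all defects found in the release that automation caught."""
    if stats.defects_found_total == 0:
        return None  # No defects found: the ratio is undefined.
    return 100 * stats.defects_found_by_automation / stats.defects_found_total


def time_saving_percentage(stats):
    """How much shorter automated testing was than running the same scripts manually."""
    if stats.estimated_manual_test_hours == 0:
        return None  # Nothing to compare against.
    saved = stats.estimated_manual_test_hours - stats.automated_test_hours
    return 100 * saved / stats.estimated_manual_test_hours


# Hypothetical release: 18 of 24 defects caught by automation,
# 6 h of automated testing vs. an estimated 40 h manually.
release = ReleaseStats(18, 24, 6.0, 40.0)
print(defect_detection_percentage(release))  # 75.0
print(time_saving_percentage(release))       # 85.0
```

Tracking these values release by release matters more than any single number: a falling detection percentage or a shrinking time saving is an early sign that the suite needs maintenance.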

Conclusion

Automation is awesome, and it can really add to your QA capabilities, but it must be thought out and done properly.