The customer is a famous developer of “social games” that encompass a range of engaging online mobile games. Their availability, user-friendly design, and vibrant animations have made them highly popular among end users.

The customer embarked on developing their first mobile product with the goal of delivering a high-quality, user-friendly gaming application. a1qa specialists joined the project to ensure its success. As a result, the product was successfully launched and gained significant traction in the market. Following this success, the customer has entrusted a1qa with the quality assurance of subsequent iOS and Android applications.

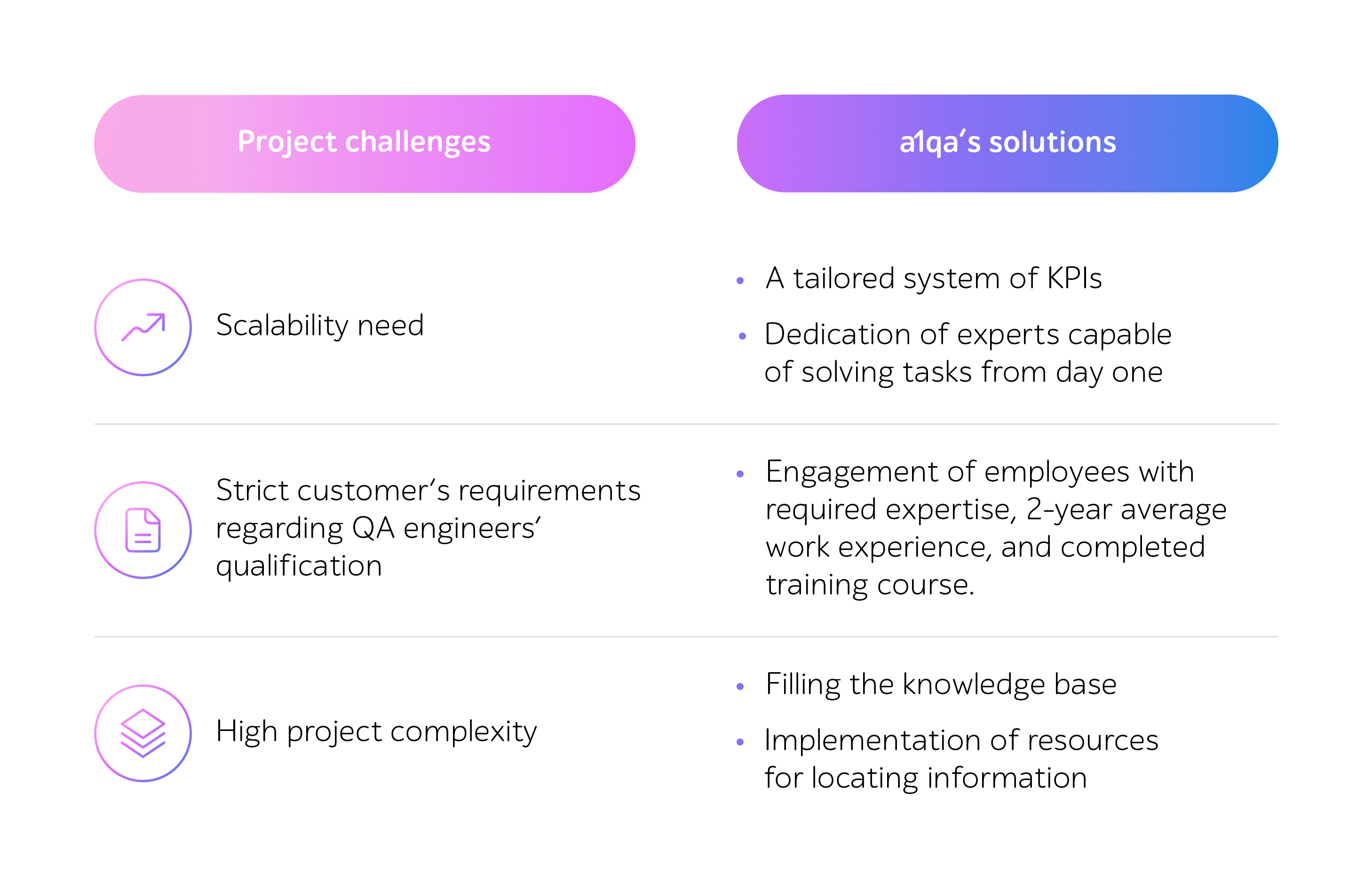

To perform QA activities, a1qa initially assigned 3 mobile testing engineers, who tested the software on the 8 most popular mobile devices. Over time, the scope of products increased, and a clear need to expand the team arose.

To successfully enlarge the team, a1qa has applied a step-by-step approach to scaling. The process includes accumulating and transferring knowledge through a customized training course. QA engineers also need to pass an exam to ensure their seamless integration into the project’s infrastructure and workflows. Currently, 87 experts across 4 dedicated QA teams conduct testing on more than 150 in-house devices. Each specialist focuses on a specific project area, such as back-end testing.

The client valued the opportunity to meet project candidates personally during interviews, which helped build rapport from the start and confirm each candidate’s suitability for the project. The well-established relationships and communication between the client and a1qa made this possible.

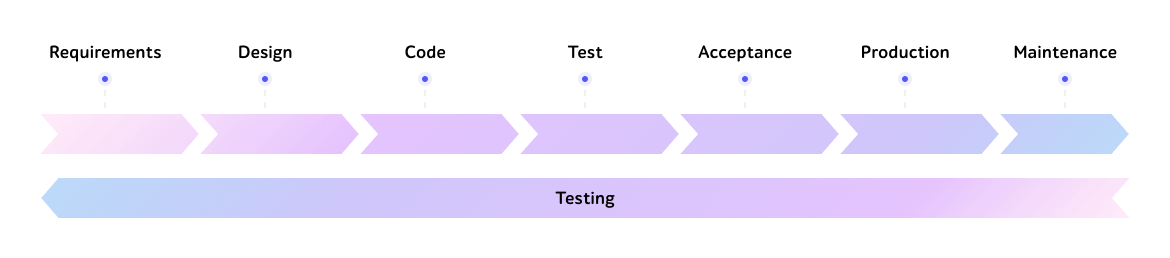

When a1qa joined the project, the team worked out a convenient schedule adapted to the customer’s working hours, smoothly integrated into the client’s infrastructure, and began performing the following high-priority QA activities.

Every year or when a project manager changes, a1qa performs a process audit to define possible areas for improvement or identify existing bottlenecks, continuously enhance the quality of services delivered, and make sure all QA teams align with set business goals.

Every few years, a1qa conducts a comprehensive technical audit to close any tech-related gaps, ascertain that test automation and performance testing solutions are efficient, and prevent data leakage.

Performed regularly, these activities help improve delivery quality and confidence in maintaining high-quality code.

The tasks of the teams vary depending on the project cycle. The engineers began by conducting front-end testing. As they worked efficiently thanks to vast QA expertise and a deep understanding of the industry and the client’s software, they were also entrusted with back-end testing.

The engineers started by creating a test strategy to define a guiding approach to ensuring software quality. They determined the most suitable test documentation type: test cases. As the back-end contains complex software logic and the client planned to automate testing, it was vital to describe every step of the testing process. After preparing and verifying the test models and test cases in TestRail, the QA engineers got down to testing.

First, the a1qa engineers performed sanity checks after receiving software builds to ascertain that the proposed functionality worked roughly as expected. If a check failed, the build was rejected, and the issues had to be fixed before the build was passed to the QA teams for more rigorous testing. As there could be up to 20 builds in a single day, a1qa introduced a rule for software developers to run sanity checks on their own using a1qa’s list of verifications and only then pass the builds on for further testing.
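The sanity gate described above can be sketched as a small script. This is a minimal illustration rather than the project’s actual tooling: the check names, the `run_sanity_gate` function, and the build identifier are all hypothetical, and real checks would exercise the build instead of returning fixed values.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SanityResult:
    build_id: str
    failed_checks: List[str]

    @property
    def accepted(self) -> bool:
        # A build moves on to deeper testing only if every check passed.
        return not self.failed_checks


def run_sanity_gate(build_id: str, checks: Dict[str, Callable[[], bool]]) -> SanityResult:
    """Run every named check and collect the names of the ones that fail."""
    failed = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:
            ok = False  # a crashing check counts as a failure
        if not ok:
            failed.append(name)
    return SanityResult(build_id=build_id, failed_checks=failed)


# Hypothetical checklist; the lambdas stand in for real verifications.
example_checks = {
    "app_launches": lambda: True,
    "login_screen_opens": lambda: True,
    "store_page_loads": lambda: False,
}

result = run_sanity_gate("build-001", example_checks)
print(result.accepted, result.failed_checks)
```

Returning the list of failed checks, rather than a bare pass or fail flag, tells the developer who submitted the build what to fix before resubmitting it.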

a1qa plans to automate all sanity checks to optimize QA processes and free up the time of engineers to execute other high-priority project activities.

In addition, the a1qa engineers performed smoke, new feature, regression testing, and defect validation.

These combined efforts helped spot software issues, ascertained that recently added functionality didn’t affect product logic, and ensured the faultless operation of previously introduced improvements.

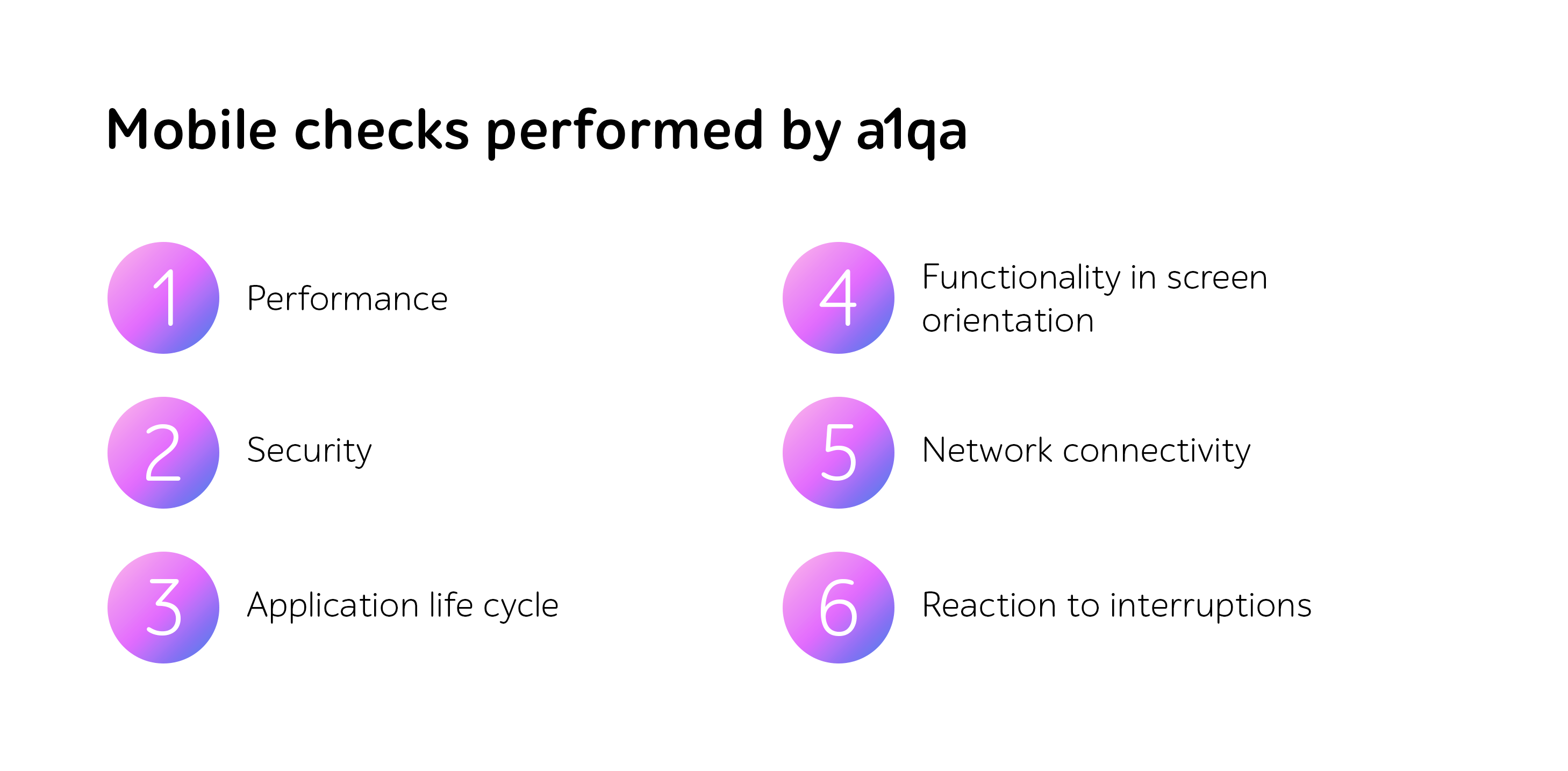

The engineers performed testing on real devices that a1qa bought specifically for the project. This provided the most true-to-life environment, mirrored the user’s experience, and increased testing accuracy. The specialists followed a comprehensive list of checks to verify the following:

Apart from software testing, the QA manager must continuously analyze device coverage to ensure its completeness, so they always keep track of the range of devices needed to perform relevant tests. In the event of project rotations or the release of new phones, the a1qa manager handles the necessary purchases. Once acquired, the devices are distributed to the appropriate teams. If needed, the devices are sent to the new locations of QA engineers to ensure smooth operations.

To help the client reach a target audience that uses various devices, the QA experts ran compatibility checks. These helped guarantee seamless software operation across diverse platforms and their versions for both iOS and Android, as well as on a range of devices with different screen sizes and resolutions, CPU, GPU, memory, and other technical parameters. The team also takes care of releases for different distribution services, such as Google Play, the Apple App Store, and the Amazon Appstore.

During testing, a1qa uses the client’s data to identify the top 10 most commonly used devices for payments on each platform. These lists are updated every three months to ensure thorough testing coverage.
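As an illustration of how such lists could be derived, the sketch below ranks devices by the number of payments per platform. It assumes the payment data is available as `(platform, device)` pairs; the function name and the sample records are hypothetical and not taken from the project.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def top_payment_devices(
    payments: Iterable[Tuple[str, str]], limit: int = 10
) -> Dict[str, List[str]]:
    """Return the `limit` most frequent payment devices for each platform."""
    counts: Dict[str, Counter] = {}
    for platform, device in payments:
        counts.setdefault(platform, Counter())[device] += 1
    return {
        platform: [device for device, _ in counter.most_common(limit)]
        for platform, counter in counts.items()
    }


# Made-up sample records, one entry per payment.
sample_payments = [
    ("iOS", "iPhone 13"),
    ("iOS", "iPhone 13"),
    ("iOS", "iPhone 11"),
    ("Android", "Galaxy S21"),
    ("Android", "Pixel 6"),
    ("Android", "Galaxy S21"),
]

print(top_payment_devices(sample_payments, limit=1))
```

Rerunning the same ranking on fresh data at each review makes it easy to see which devices have entered or left the list.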

In addition, some devices become outdated over time, while new devices enter the market with core features that differ from those of current devices. To account for this, the engineers review the lists every 6 months and acquire new devices.

Providing a consistently great user experience to clients globally is essential to increasing market presence. To accomplish this, a1qa performed localization testing. The client successfully adapted the software for international markets, including the EU, the USA, Canada, Australia, and parts of Asia, thanks to testing of important elements such as data layouts and cultural aspects. a1qa ensured that all the features were designed in accordance with the target country or region.

To optimize laborious, time-consuming verifications, expedite software deployment, and ensure regular release cadence, a1qa automated part of the front- and back-end testing activities.

The client wanted to automate the testing of 3 mobile applications created with the Unity engine, which limited UI test automation, as it was impossible to interact with the UI elements.

The client initially used the open-source AltUnity testing tool with Appium to automate tests by sending requests to the application build and retrieving data about UI elements. However, due to security concerns, they developed an in-house tool based on the WebSocket protocol. This tool established a secure connection and enabled smooth data transfer by embedding a special plug-in into the build to access the required elements. Additionally, the client’s engineers created a solution that lists all on-screen elements, allowing testers to select the specific element to interact with.

Within these activities, a1qa’s QA automation engineers focused on investigating the capabilities of the open-source test automation solution. Their goal was to provide a comprehensive comparison with the in-house tool, aiding in the decision-making process to select the most suitable option.

a1qa’s other high-priority responsibilities include:

To verify the quality of the back-end, the a1qa team automated regression testing of APIs for both the new mobile application, which was not yet released, and the existing solutions available in stores, all of which shared a single back-end.

The new application was developed based on a microservices architecture. To confirm that the APIs handled requests correctly and returned the right responses, QA automation engineers prepared Java-based automated scripts and wrote a customized test automation framework.

As for the existing mobile applications, the client initiated the process of their reengineering from a monolithic architecture to a microservices-based one.

This part of the back-end was complicated and involved diverse admin panels and services offering extra functionality, such as uniting end users into segments or managing user accounts. The modernization process wasn’t streamlined, so new APIs became available for automated testing only slowly.

Nevertheless, to avoid wasting time and to add value from the start of the re-architecting activities, a1qa’s QA automation engineers assisted the client in defining the test automation scope. They developed automated tests to ensure the quality of the legacy back-end and maintained these tests to prevent stability issues, particularly as testing involved UI elements.

While testing both new and current applications, a1qa’s specialists configured integration with Allure Report and TestRail to effectively manage test cases, establish automated monitoring of results, and get real-time insights into the QA process. To ensure continuous quality assessment, tests were run 4 times a month.
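The source does not describe how the TestRail integration was implemented. As one possible shape, the sketch below builds the request that reports a single automated result through TestRail’s `add_result_for_case` API endpoint. The base URL, run ID, case ID, and helper name are placeholders, and the sketch only prepares the request; it does not send it or handle authentication.

```python
import json
from typing import Tuple

# Status IDs of TestRail's default "Passed" and "Failed" result statuses.
PASSED, FAILED = 1, 5


def build_result_request(
    base_url: str, run_id: int, case_id: int, passed: bool, comment: str = ""
) -> Tuple[str, str]:
    """Return the URL and JSON body for reporting one test case result."""
    url = (
        f"{base_url.rstrip('/')}/index.php?/api/v2/"
        f"add_result_for_case/{run_id}/{case_id}"
    )
    body = json.dumps(
        {"status_id": PASSED if passed else FAILED, "comment": comment}
    )
    return url, body


# Placeholder instance URL and IDs, for illustration only.
url, body = build_result_request(
    "https://example.testrail.io/", 12, 345, passed=False, comment="HTTP 500"
)
print(url)
print(body)
```

In a real pipeline the request would be sent as an authenticated POST with a JSON content type after each automated run, so that results appear in TestRail without manual entry.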

To help the client improve cost-efficiency, a1qa’s QA automation engineers, in cooperation with the client’s specialist, investigated the feasibility of introducing AI-powered coding assistance within both front-end and back-end quality control activities.

The first investigation concerned the introduction of an AI-driven tool for automated code review of pull requests. The need was pressing: due to project rotations, newcomers made up most of the test automation team, so only a few specialists could conduct code reviews, and the process was too time-consuming. By leveraging AI for the initial review, a1qa could streamline the process, deliver automated tests and software updates quicker, and free up senior engineers’ time for other priority tasks.

After a thorough analysis, a1qa and the client decided on Code Rabbit, as it can track the history of all commits across the entire code branch before a pull request, thus providing more accurate, context-specific technical comments on code quality after any changes. The use of Code Rabbit on the project has passed the first stage of approval, and now the client’s QA director will pitch the idea of using this tool to their AI department.

The second key investigation concerned the choice of an AI tool for reducing the time needed to develop an automated test, which averaged 8 hours due to high software complexity.

As a part of the selection process, a1qa dedicated time to risk management. By understanding the internal project requirements as well as the client’s concerns and expectations, a1qa identified areas of concern and highlighted them for further discussion with the client and resolution.

To be precise, the client is most concerned about data security. When using AI, no one can give a 100% guarantee that data used to train LLMs won’t surface elsewhere, even despite the tool’s regulations on non-use of data. To prevent leakage of the names of unreleased features, a1qa suggested renaming them and creating an internal vocabulary. As a result, even theoretical risks of AI implementation were mitigated during the preparation stage.
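The renaming approach can be pictured as a substitution step applied to any text before it reaches an AI tool. The sketch below is only an illustration of that idea: the feature names, the aliases, and both helper functions are invented for the example and do not come from the project.

```python
import re
from typing import Dict


def mask_feature_names(text: str, vocabulary: Dict[str, str]) -> str:
    """Replace each key of `vocabulary` found in `text` with its alias."""
    if not vocabulary:
        return text
    # Try longer names first so a short name never clips a longer one.
    names = sorted(vocabulary, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(name) for name in names))
    return pattern.sub(lambda match: vocabulary[match.group(0)], text)


def unmask_feature_names(text: str, vocabulary: Dict[str, str]) -> str:
    """Map aliases back to the real names, e.g. in the tool's output."""
    reverse = {alias: name for name, alias in vocabulary.items()}
    return mask_feature_names(text, reverse)


# Hypothetical internal vocabulary: real name -> neutral alias.
vocabulary = {"DragonRaid": "FeatureA", "DragonRaidShop": "FeatureB"}

original = "Verify that DragonRaidShop opens from the DragonRaid lobby"
masked = mask_feature_names(original, vocabulary)
print(masked)
print(unmask_feature_names(masked, vocabulary) == original)
```

The matching here is exact and case-sensitive, so variants such as `dragon_raid` would need their own vocabulary entries.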

After concluding the research, a1qa suggested leveraging GitHub Copilot. Its introduction within test automation turned out to be highly effective. The development time of an automated test was reduced by 28%, and over nine months the QA team saved 788 hours, comparable to the effort of implementing an additional 225 automated tests.
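The reported figures can be related with some quick arithmetic. The sketch below assumes the 8-hour average mentioned earlier is the baseline to which the 28% reduction applies. The source does not say how the 225-test equivalence was calculated, so the last value is simply the ratio of the two reported numbers.

```python
# Figures reported in the text.
baseline_hours_per_test = 8.0   # average development time before the assistant
reduction = 0.28                # reported reduction in development time
hours_saved = 788.0             # reported savings over nine months
equivalent_tests = 225          # reported equivalent in additional tests

# Assumption: the 28% reduction applies to the 8-hour baseline.
hours_per_test_with_assistant = baseline_hours_per_test * (1 - reduction)
hours_saved_per_test = baseline_hours_per_test - hours_per_test_with_assistant

# Ratio of the two reported numbers, not a figure stated in the source.
implied_hours_per_equivalent_test = hours_saved / equivalent_tests

print(round(hours_per_test_with_assistant, 2))      # 5.76
print(round(hours_saved_per_test, 2))               # 2.24
print(round(implied_hours_per_equivalent_test, 2))  # 3.5
```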

Furthermore, a1qa is using GitHub Copilot in other test automation activities, such as creating supplementary documentation, and is planning to collect insights on its effectiveness in the future.

Striving to improve customer experience, gauge software ability to handle a high influx of end users, and ensure its brisk operation, the client initiated both server- and client-side performance testing.

Through client-side verifications, the QA engineers assessed the apps’ ease of use and compliance with the stipulated requirements, identified issues that affected CX, and introduced a range of improvements.

Server-side checks covered all major types, from stress to volume testing. As a result, the team was able to confirm that, after migration to the cloud, the back-end of the mobile applications would withstand the desired load with no detriment to quality.

In addition to testing, the QA engineers set up a system of performance monitoring (e.g., dashboards in Grafana, New Relic) to:

Additionally, over the past year a1qa’s team has fully automated performance testing verifications, which helped accelerate the QA process and ensure earlier detection of performance bottlenecks.

It was important for the QA team to make sure that diverse components and modules remain fully compatible and don’t pose any risks to correct software operation.

The QA engineers performed integration testing to ensure that the different parts of the software work together correctly and without errors.

Verifying integrations helped confirm that users can easily log in to the application through social networks such as Facebook.

While performing the aforementioned activities, the QA engineers recorded every identified problem in the ‘project error log.’ At least once a month, the experts review the data and discuss both potential issues to address and the results of fixing previous ones.

During the 9+ years of cooperation, a1qa’s team completed over 600,000 tests.

Due to the well-configured communication channels between dedicated teams and streamlined interaction processes with the client’s developers, QA engineers could reliably ensure strict compliance with all deadlines. All detected defects were fixed before 600 software updates went live.

The project continues to this day. The customer has appreciated the responsible approach provided by the a1qa team and is glad to proceed with continued cooperation.